This is the second article in the series where we read Campfire's source code to understand and extract useful techniques, practices and patterns. In today's article, we'll learn how you can play sounds in your Rails application using the Audio API.

Before we begin, you should know that playing sounds on a website is pretty simple. All you have to do is this:

const sound = new Audio("/audio.mp3")
sound.play()

What's more interesting is how Campfire uses the Audio API combined with Stimulus and Rails to accomplish this:

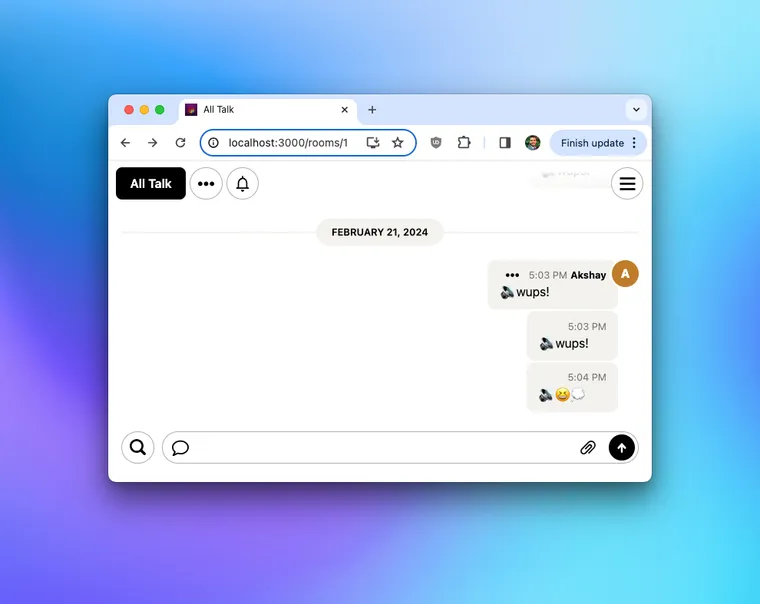

That's what we'll explore in this post: how Campfire parses the user input to render the audio element on the screen, which plays the sound once when the message is posted and again whenever it's clicked.

Sounds exciting? Let's dive in.

Let's start from the very beginning. To play a sound, the user has to type /play followed by the name of the sound, e.g. /play bezos, and hit enter.

We'll skip the part where a controller intercepts that request and saves the message in the database, because that's standard Rails 101. Let's see what happens after the controller saves the message and displays it via the _message partial, which in turn renders the message's presentation via the _presentation partial.

# app/views/messages/_message.html.erb
<%= render "messages/presentation", message: message %>

# app/views/messages/_presentation.html.erb
<%= message_presentation(message) %>

The templates and partials are not this small, by the way. I've removed all the extra tags and code that's not relevant to displaying a message.

The message_presentation function is defined in... you guessed it, the MessagesHelper module.

# app/helpers/messages_helper.rb
def message_presentation(message)
  case message.content_type
  when "attachment"
    # ...
  when "sound"
    message_sound_presentation(message)
  else
    # ...
  end
end

The message_sound_presentation function is defined in the same helper. It generates the HTML containing the Stimulus controller, the URL of the sound asset, and the action that plays the sound.

# app/helpers/messages_helper.rb
def message_sound_presentation(message)
  sound = message.sound

  tag.div class: "sound", data: { controller: "sound", action: "messages:play->sound#play", sound_url_value: asset_path(sound.asset_path) } do
    play_button + (sound.image ? sound_image_tag(sound.image) : sound.text)
  end
end

Let's see how Campfire finds the sound in the first statement, sound = message.sound. The sound method is defined on the Message model. It parses the message body, e.g. /play bezos, and returns the Sound instance with the matching name, bezos.

# app/models/message.rb
class Message < ApplicationRecord
  def sound
    plain_text_body.match(/\A\/play (?<name>\w+)\z/) do |match|
      Sound.find_by_name match[:name]
    end
  end
end

It's also important to note the asset_path(sound.asset_path) call in the helper, which computes the public URL of the sound file. Sound is a plain Ruby model in Campfire:

# app/models/sound.rb (simplified)
class Sound
  attr_reader :asset_path

  def initialize(name)
    @name = name
    @asset_path = "#{name}.mp3"
  end
end

By the way, the .mp3 files are stored under the app/assets/sounds directory.
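To see the lookup end to end, here is a minimal plain-Ruby sketch of parsing the message body and resolving the sound. The regular expression is the one from the Message model. The SOUND_NAMES list, the find_by_name implementation, and the sound_for helper are assumptions made up for illustration, since the article only shows a simplified Sound class and Campfire's real lookup may differ.

```ruby
# Simplified stand-in for Campfire's Sound model. SOUND_NAMES and this
# find_by_name implementation are hypothetical; only the regex below
# comes from the Message model shown above.
class Sound
  SOUND_NAMES = %w[bezos wups].freeze

  attr_reader :name, :asset_path

  # Returns a Sound for a known name, or nil for an unknown one.
  def self.find_by_name(name)
    new(name) if SOUND_NAMES.include?(name)
  end

  def initialize(name)
    @name = name
    @asset_path = "#{name}.mp3"
  end
end

PLAY_COMMAND = /\A\/play (?<name>\w+)\z/

# Hypothetical helper mirroring Message#sound for a plain string body.
# String#match only yields to the block on a successful match, so any
# body that is not exactly "/play <name>" returns nil.
def sound_for(body)
  body.match(PLAY_COMMAND) do |match|
    Sound.find_by_name(match[:name])
  end
end

sound_for("/play bezos")&.asset_path  # "bezos.mp3"
sound_for("play bezos")               # nil, the leading slash is required
sound_for("/play bezos now")          # nil, \z rejects trailing text
```

Because the pattern is anchored with \A and \z, a message that merely mentions /play somewhere in a longer sentence is treated as ordinary text rather than a sound command.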

The message_sound_presentation helper above returns the following HTML:

<div class="sound" data-controller="sound" data-action="messages:play->sound#play" data-sound-url-value="/assets/wups-fbad935c.mp3">
  <button class="btn btn--plain" data-action="sound#play">
    🔊
  </button>
  wups!
</div>

If you're not familiar with Stimulus, I suggest you check out my articles on Stimulus. A couple of interesting things to note:

- the message is wrapped in a div with the data-controller="sound" attribute, which means the sound_controller.js class contains the logic to play the sound.
- the data-sound-url-value attribute contains the location of the sound. This value can be accessed in the sound_controller.js class via the this.urlValue property.
- the data-action on the inner HTML button instructs Stimulus to call the play() method on the sound_controller.js class whenever the user clicks this button.
- the data-action on the outer div instructs Stimulus to listen for the play event dispatched by the messages_controller.js class and call the play() method on the sound_controller.js class (we'll come back to it later).
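The naming link between the helper's data hash and the attributes in the HTML can be sketched in plain Ruby. This is only an approximation for illustration: data_attributes is a hypothetical function, and Rails' tag helper does the real work, including HTML-escaping the values, which this sketch skips.

```ruby
# Hypothetical helper that mimics how Rails turns the keys of a `data:`
# hash into data-* attributes: underscores in the key become dashes.
# Unlike Rails, it does not HTML-escape the values.
def data_attributes(data)
  data.map { |key, value| %(data-#{key.to_s.tr("_", "-")}="#{value}") }.join(" ")
end

data = {
  controller: "sound",
  action: "messages:play->sound#play",
  sound_url_value: "/assets/wups-fbad935c.mp3"
}

puts data_attributes(data)
```

The sound_url_value key ends up as data-sound-url-value, which is the attribute Stimulus reads to populate this.urlValue on the sound controller.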

The sound_controller.js file is a very simple Stimulus controller class that plays the sound provided via urlValue.

import { Controller } from "@hotwired/stimulus"

export default class extends Controller {
  static values = { "url": String }

  play() {
    const sound = new Audio(this.urlValue)
    sound.play()
  }
}

So far, so good. The controller plays the sound when you click the icon button.

However, remember that Campfire also needs to play the sound when the message is displayed for the very first time. How does that work? That's where the messages:play action on the outer div comes into play.

Campfire's messages are rendered inside an element managed by the messages Stimulus controller, which declares the following action.

"turbo:before-stream-render@document->messages#beforeStreamRender"

The turbo:before-stream-render event is fired right before Turbo renders a stream update containing the message. When it fires, Stimulus calls the beforeStreamRender method on the messages_controller.js class.

// app/javascript/controllers/messages_controller.js
export default class extends Controller {
  async beforeStreamRender(event) {
    // ...
    this.#playSoundForLastMessage()
    // ...
  }

  #playSoundForLastMessage() {
    const soundTarget = this.#lastMessage.querySelector(".sound")

    if (soundTarget) {
      this.dispatch("play", { target: soundTarget })
    }
  }
}

The #playSoundForLastMessage method dispatches the play event on the sound element. By default, Stimulus prefixes dispatched event names with the controller's identifier, so the event is named messages:play. The messages:play->sound#play action that message_sound_presentation added to the outer div listens for it and calls the sound#play method, which plays the sound.

def message_sound_presentation(message)
  tag.div class: "sound", data: { action: "messages:play->sound#play" } do
    # ...
  end
end

That's a wrap. I hope you found this article helpful and you learned something new.

As always, if you have any questions or feedback, didn't understand something, or found a mistake, please leave a comment below or send me an email. I reply to all emails I get from developers, and I look forward to hearing from you.
